How Raygun increased transactions per second by 44% by removing Nginx

Posted Jul 31, 2020 | 5 min. (963 words)

Here at Raygun, improving performance is baked into our culture. In a previous blog post, we showed how we achieved a 12% performance lift by migrating Raygun’s API to .NET Core 3.1.

After we published that post, someone asked on Twitter why we still used Nginx as a proxy in front of the Raygun API application. Our response was that we thought this was the approach Microsoft recommended. It turns out this has not been the case since the release of .NET Core 2.1. Kestrel has matured a lot since the .NET Core 1.0 days when we first started using it, and the security experts at Microsoft have been comfortable with Kestrel being used on the front line since that release.

Why remove Nginx (and why keep it)?

There are still cases where you’d want to use a proxy like Nginx, which we have listed below. In Raygun’s case, our API servers host only one application and are exposed to the internet only through a load balancer. This means the restrictions on port sharing don’t apply to us, and the servers’ exposed public surface has already been minimized.

Some of the reasons Microsoft outlines for using a reverse proxy are that it can:

- Limit the exposed public surface area of the apps that it hosts

- Provide an additional layer of configuration and defense

- Integrate better with existing infrastructure

- Simplify load balancing and secure communication (HTTPS) configuration. Only the reverse proxy server requires an X.509 certificate, and that server can communicate with the app’s servers on the internal network using plain HTTP.

Business outcomes

For our API nodes, removing Nginx from the configuration allows us to handle more volume at no additional cost.

We also saw a significant improvement in the average and 99th percentile response times during the load test. This means our customers’ requests to the API complete faster, allowing them to send more data in less time.

Since putting the new server configuration into production we’ve also seen a significant decrease in 5xx errors reported by our load balancer. We’re now handling the full client load with fewer errors experienced by our users.

How we tested .NET Core performance

Each test machine was a c5.large AWS instance running Ubuntu 18.04. On the baseline machine, Nginx acts as a proxy to the Kestrel web server on the same machine; on the comparison machine, requests are handled directly by Kestrel.

We used Apache JMeter to post sample Raygun Crash Reporting payloads to the API. JMeter can simulate a very high load with many concurrent requests. We tuned the load to the point where each machine started to max out its CPU but was not yet overloaded, so every request was still handled (a 100% success rate).

JMeter ran multiple 10-minute tests, measuring each request and generating a summary report at the end of each run. We then averaged the results across the test runs to produce the final results below.
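The averaging step can be sketched in a few lines of Python. This is a minimal illustration, not the script we used: the run figures are hypothetical placeholders rather than measured data, and the field names (`avg_ms`, `p99_ms`, `tps`) are assumptions, not JMeter's own report columns.

```python
from statistics import mean

# Hypothetical per-run summaries (illustrative values only, not measured results).
runs = [
    {"avg_ms": 1.0, "p99_ms": 5.0, "tps": 5000.0},
    {"avg_ms": 2.0, "p99_ms": 7.0, "tps": 5200.0},
    {"avg_ms": 3.0, "p99_ms": 6.0, "tps": 5400.0},
]


def average_runs(runs):
    """Average each metric across runs, giving every run equal weight."""
    metrics = runs[0].keys()
    return {m: mean(run[m] for run in runs) for m in metrics}


summary = average_runs(runs)
print(summary)
```

Note that averaging a per-run 99th percentile only approximates the percentile of the pooled requests; it is a reasonable summary when every run has the same duration and a similar request count.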

The results from removing Nginx

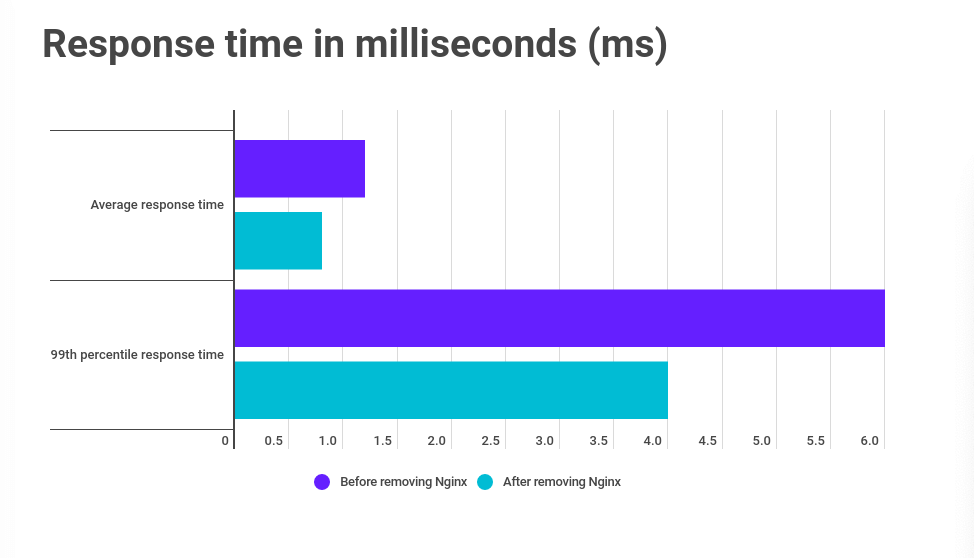

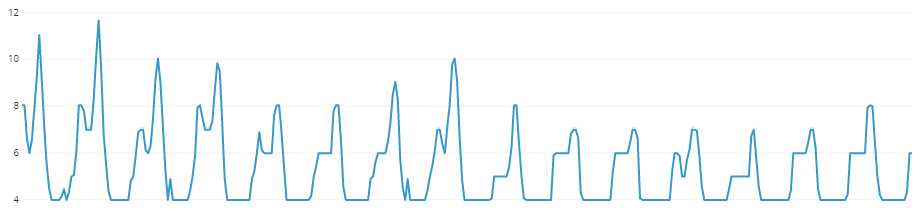

Response time in milliseconds (ms)

Average response time reduced (lower is better) from 1.2ms to 0.8ms, which equates to a 33% decrease. 99th percentile response time reduced from 6ms to 4ms, which equates to a 33% decrease.

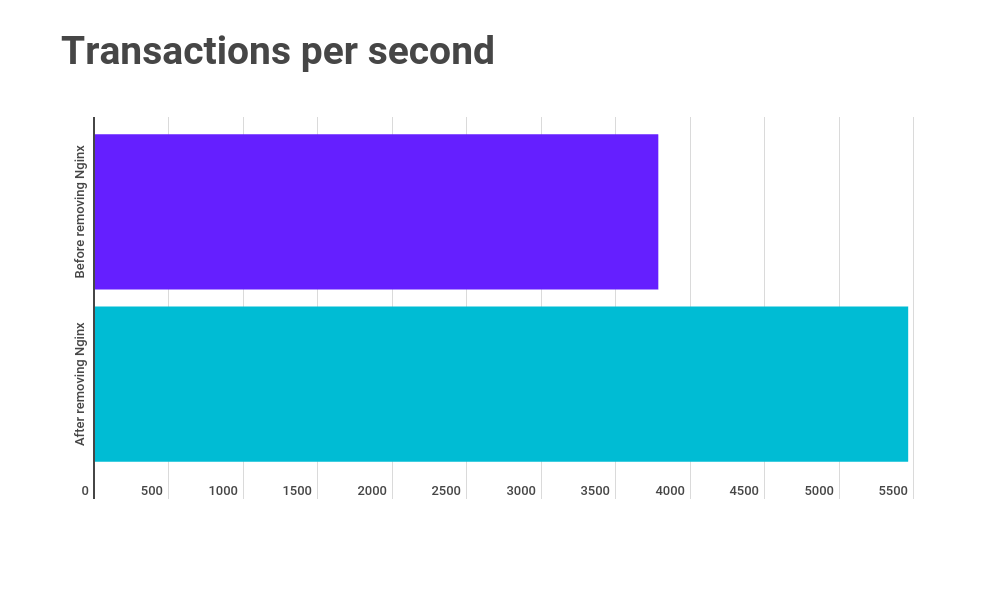

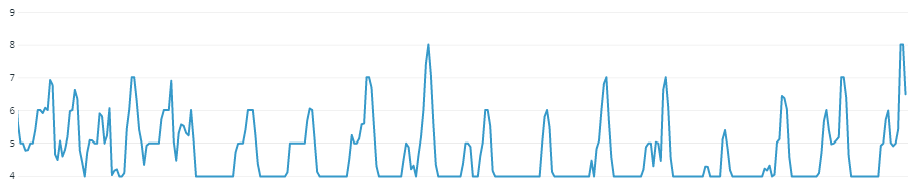

Transactions per second

Transactions per second increased (more is better) from 3,783 to 5,461, which equates to a 44% increase.
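The percentages quoted for response time and throughput are plain relative changes against the Nginx baseline. A short Python sketch of that arithmetic, using the figures reported above (the helper name `percent_change` is ours, chosen for illustration):

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, as a percentage."""
    return (after - before) / before * 100


# Figures taken from the results above.
avg_change = percent_change(1.2, 0.8)    # average response time, ms
p99_change = percent_change(6, 4)        # 99th percentile response time, ms
tps_change = percent_change(3783, 5461)  # transactions per second

print(f"average: {avg_change:.1f}%")
print(f"p99:     {p99_change:.1f}%")
print(f"tps:     {tps_change:.1f}%")
```

A negative value is a decrease and a positive value is an increase, so the two response-time figures come out negative and the throughput figure positive.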

Observations from running the new servers in production

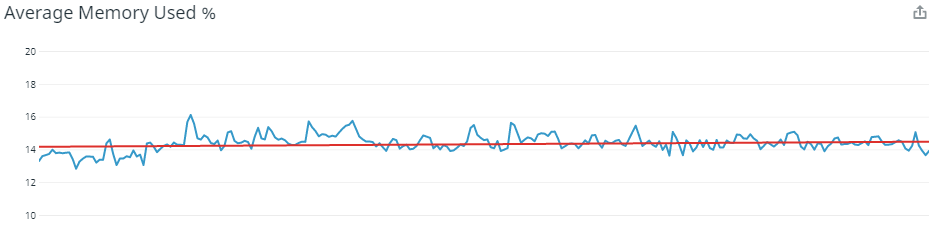

Memory usage

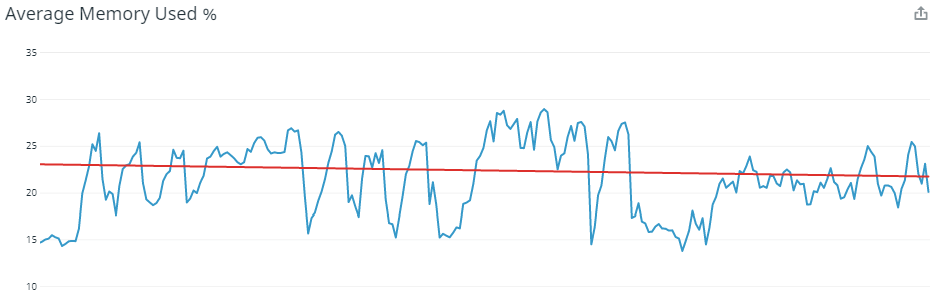

When running instances with Nginx, the average memory used per instance was consistent, hovering between 13% and 16%. Since the removal of Nginx, average memory usage has increased and varies much more widely, now running between 15% and 30% with the trend around 22%. We believe this is because Nginx was capping the number of requests that reached Kestrel. Kestrel was therefore processing requests at a steady rate, which kept memory usage nearly flat. With this bottleneck removed, we now see higher and more variable memory usage as Kestrel handles a varying number of requests.

Nginx + Kestrel:

Kestrel only:

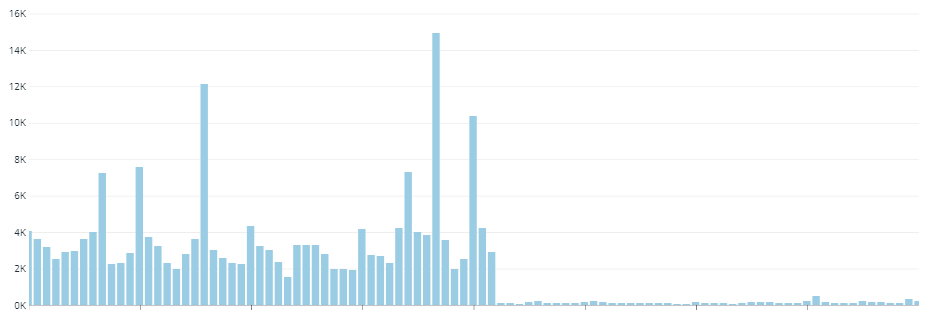

Number of active nodes

The average number of active nodes has dropped from 5.35 hosts to 4.66 hosts, a reduction of roughly 13%. We now see longer periods running our minimum of four servers, and at peak periods we’re running fewer servers.

Nginx + Kestrel:

Kestrel only:

Load balancer 5xx error rate

For a while, our load balancer stats had shown a fairly high 5xx error rate. These errors did not come from our application and were not being reported as crash reports in Raygun. It turned out that they were coming from Nginx, and by removing this proxy we now handle the full load better, resulting in a significant reduction in errors.

Because of the volume of requests we receive, even the higher numbers below are a tiny percentage of the total requests we handle. Still, it’s positive to note a significant reduction from removing the Nginx layer. This isn’t a criticism of Nginx; it is possibly a configuration issue at our end. However, simplifying our setup seemed to resolve the issue.

5xx error rate


Closing thoughts

It’s great to question original assumptions about performance, and at Raygun, we aren’t afraid of “pulling on a thread” to see where it gets us. As we scale our infrastructure, being able to handle more data for less cost presents some serious business wins, and it all started with someone asking a simple question: “why?”

It’s worth noting that the .NET team is constantly working on performance improvements. Even though .NET 5 isn’t scheduled for release until November this year, a myriad of significant updates is already available.
