3 Serverless error monitoring strategies for 2020

Posted Jan 29, 2019 | 7 min. (1439 words)

This article was last updated in January 2020.

If you’re thinking of running a serverless setup, congratulations! But that means you’re running a distributed system.

Going serverless brings plenty of benefits and simplifications, but they come with a tradeoff: you also take on some added complexity.

Distributed systems are, by their nature, complex. Their components communicate over a network that’s potentially unreliable, can partition, and can’t be trusted. That means monitoring your application errors is going to be especially important.

Today, we’re going to talk through some different strategies for monitoring errors. We’ll also look at areas you should be thinking about to get effective coverage of your serverless error metrics.

What is serverless?

Before we go any further, we need a quick definition. Serverless is a paradigm applied to cloud compute. Most applications require at least some compute capacity. Compute is the workhorse of your application stack. It’s used for handling requests, manipulating data for inserting into a database, sending messages, and more.

Traditionally, compute resources are provisioned in the cloud by virtue of having a machine running. This is typically a Linux server or something similar. However, this leads to being over-provisioned and having to perform additional maintenance on your running machine by applying patches, managing memory, etc.

With serverless, you don’t have to manage the underlying resource, the host machine. You simply run “functions,” which are small units of compute, on an on-demand basis. From your point of view, this removes the need to run and maintain a host machine at all.

However, with a fine-grained application composed of functions, it’s vitally important to know what’s happening under the hood. You’ll need to know how your functions are performing, whether they’re erroring, and why, so you can ensure a great user experience for your application. This is where error monitoring comes in.

Error monitoring in a serverless world

Serverless brings with it a few differences in how we might want to handle errors within our application. For instance, as mentioned, serverless is by default distributed. This means our system’s operating over a network. Network calls can’t be trusted, and we must assume that at some point they’re going to fail, be slow, or behave in a way we didn’t expect.

With serverless, you also don’t have access to your host machine. Hosts can be useful for error handling because you can install an agent, which is a process on your host that streams data about your application to some central location. But since serverless doesn’t allow for this type of model, we need to find a workaround.

When it comes to error handling, to get a good picture of our system we should look to gather and invest in three main sources of data:

Infrastructure data: This will show how the function’s performing on an aggregate level.

Logging: This shows what data our application will emit, for the purposes of debugging.

APM (Application Performance Monitoring): This gathers data such as call stacks from our application.

Sound good? Let’s dive deeper.

1. Infrastructure monitoring

To effectively monitor your serverless setup, you’ll need data on your serverless infrastructure. While you don’t need as much data as you would in a server world, you’ll still want insight into what’s going on inside your application. Most cloud providers will give you access to this data out of the box with some basic dashboard-type tooling. In addition, there are plenty of third-party applications that will let you chop up and manipulate the data however you see fit.

What really matters when it comes to monitoring your serverless infrastructure? These are the main metrics to track:

- Errors: The count of times your function failed to fully execute.

- Duration: How long is your function taking to run? Is it above or below what’s expected?

- Invocations: How often is your function being executed?

With this data you’ll want to think about what “normal” looks like for your application so you can set up monitoring and alerting. Under what circumstances do you need to be alerted to your system’s functioning? If a percentage of your functions are failing, maybe? There’s no simple answer to this question, but you can figure it out by collecting historic data and understanding the non-functional requirements for your application.
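As a rough illustration of turning those three metrics into an alerting rule, here is a minimal sketch. The `FunctionMetrics` shape, the `should_alert` helper, and the threshold values are all assumptions made for this example; your own thresholds should come from your historic data and non-functional requirements.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FunctionMetrics:
    """Aggregate metrics for one function over a time window (hypothetical shape)."""
    invocations: int
    errors: int
    avg_duration_ms: float


def should_alert(metrics: FunctionMetrics,
                 max_error_rate: float = 0.05,
                 max_avg_duration_ms: float = 1000.0) -> List[str]:
    """Return the reasons to alert; an empty list means the window looks normal.

    The default thresholds are placeholders, not recommendations.
    """
    reasons: List[str] = []
    if metrics.invocations == 0:
        return reasons  # nothing ran, so there is no error rate to compute

    error_rate = metrics.errors / metrics.invocations
    if error_rate > max_error_rate:
        reasons.append(
            f"error rate {error_rate:.1%} exceeds {max_error_rate:.1%}"
        )
    if metrics.avg_duration_ms > max_avg_duration_ms:
        reasons.append(
            f"average duration {metrics.avg_duration_ms:.0f} ms "
            f"exceeds {max_avg_duration_ms:.0f} ms"
        )
    return reasons


# Example window: 200 invocations, 30 of which failed.
window = FunctionMetrics(invocations=200, errors=30, avg_duration_ms=120.0)
print(should_alert(window))
```

Alerting on a rate rather than a raw error count means a busy function and a quiet one can share the same rule.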

2. Logging

With your infrastructure tooling set up, the next part you’ll want to get in place is your logging tooling. Logging is the practice of writing out messages throughout your application to record when certain events happen, such as the failure of a network call, along with each event’s associated metadata. Logs, if used well, can give you deep insights into how your serverless stack is performing.

Here are some best practices for logging:

Log with levels

Using log levels lets you filter your logs by how much data you want to receive. You can also set your log level so that the more verbose messages are omitted, which saves space or money in your logging storage system.
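To make level filtering concrete, here is a small sketch using Python’s standard `logging` module. The `configure_logger` helper and the `orders` logger name are hypothetical; in a real function the level name would typically come from configuration.

```python
import logging

# Map of level names this sketch accepts; anything else falls back to INFO.
LEVELS = {
    "DEBUG": logging.DEBUG,
    "INFO": logging.INFO,
    "WARNING": logging.WARNING,
    "ERROR": logging.ERROR,
}


def configure_logger(name: str, level_name: str = "INFO") -> logging.Logger:
    """Return a logger that drops every message below the chosen level."""
    logger = logging.getLogger(name)
    logger.setLevel(LEVELS.get(level_name.upper(), logging.INFO))
    return logger


logger = configure_logger("orders", "WARNING")

logger.debug("cart contents: %s", ["sku-1", "sku-2"])  # dropped: below WARNING
logger.info("order received")                          # dropped: below WARNING
logger.warning("payment provider responded slowly")    # emitted
logger.error("payment provider call failed")           # emitted
```

Raising the level to `WARNING` keeps the failure signals while cutting the volume you pay to store.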

Centralize them

It used to be a common practice to manually go into a server to access the live running logs and run search commands on your log files directly. However, this approach is naive. Viewing logs manually is a painful, reactive process. Instead, it makes sense to send logs from all your running functions and infrastructure into a single place. When centralized, your logs can be analyzed, graphed, and tracked.

Structure them

To get the most out of your logs, structure the data within them. That means you can emit logs in a standard format, be that JSON or some other structure such as one delimited by pipes. Which format you use doesn’t matter, as your tooling can usually help you handle different formats. Just be consistent and make sure your logs are rich with data.
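One possible way to emit structured logs is a JSON formatter on top of Python’s standard `logging` module. This is a sketch, not a prescribed format: the `JsonFormatter` class, the field names, and the `context` key passed through `extra` are choices made for this example.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Extra fields arrive via `extra={"context": {...}}`;
        # "context" is a key name chosen for this sketch.
        entry.update(getattr(record, "context", {}))
        return json.dumps(entry)


stream = logging.StreamHandler()
stream.setFormatter(JsonFormatter())

logger = logging.getLogger("checkout")
logger.addHandler(stream)
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate output through the root logger

logger.error(
    "network call failed",
    extra={"context": {"service": "payments", "status": 503}},
)
```

Because every entry has the same keys, a centralized logging tool can filter or graph on `level`, `service`, or `status` without parsing free text.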

Correlation IDs

One more thing: An important part of logging in a distributed system is leveraging a correlation ID. This is a piece of metadata that you’d augment your log messages with so you can correlate different events within your system. With correlation IDs, you can visualize a request bouncing around within your application and more easily diagnose issues.
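A correlation ID can be attached to every log line without passing it to each logging call. The sketch below uses a `contextvars.ContextVar` and a `logging.Filter`; the `handler` entry point, the shape of `event`, and the `correlation_id` key are assumptions for illustration rather than any provider’s API.

```python
import contextvars
import logging
import uuid

# Holds the ID for the request currently being processed.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Copy the current correlation ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


stream = logging.StreamHandler()
stream.setFormatter(
    logging.Formatter("%(levelname)s [%(correlation_id)s] %(message)s")
)
stream.addFilter(CorrelationIdFilter())

logger = logging.getLogger("orders")
logger.addHandler(stream)
logger.setLevel(logging.INFO)
logger.propagate = False


def handler(event: dict) -> str:
    """Hypothetical function entry point; `event` stands in for the request."""
    # Reuse the caller's ID if one was sent, otherwise start a new one.
    cid = event.get("correlation_id") or str(uuid.uuid4())
    correlation_id.set(cid)
    logger.info("processing request")
    return cid  # include this ID on any downstream call or message


handler({"correlation_id": "abc-123"})
```

Each function that receives the ID logs with it and forwards it, so searching your centralized logs for one ID shows the whole path of a request.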

With your logging, it helps to reverse-engineer the picture of the system you want. When building functionality, you’ll want to ask yourself: What picture of the system do we need from this part of our application? What questions do we want to ask? What type of visualizations or views of the system do we require?

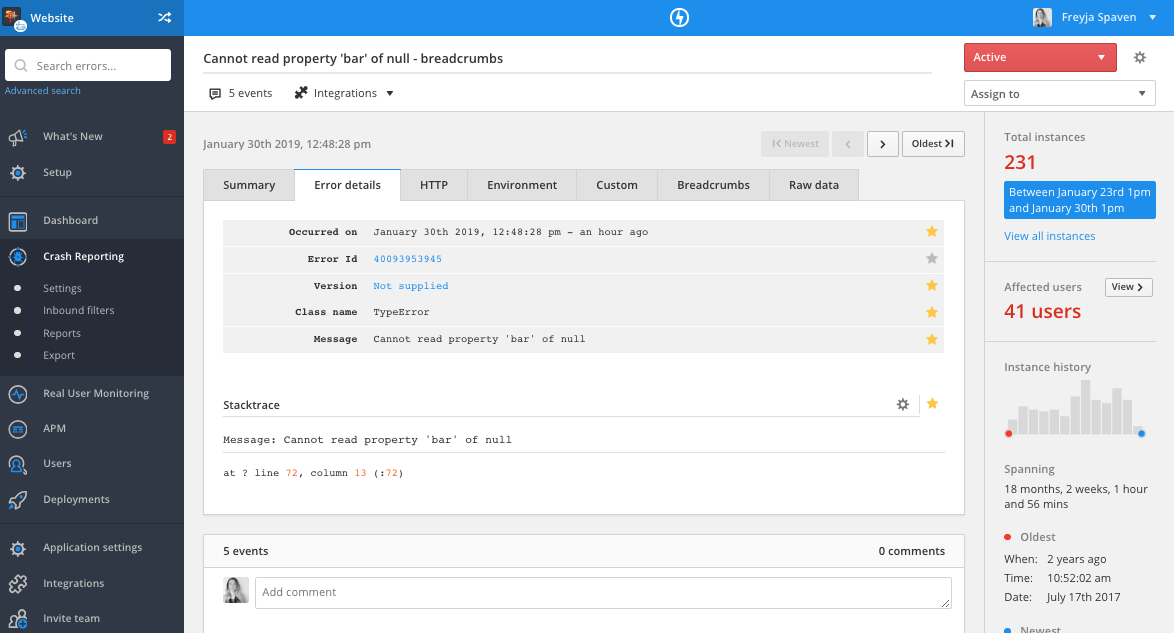

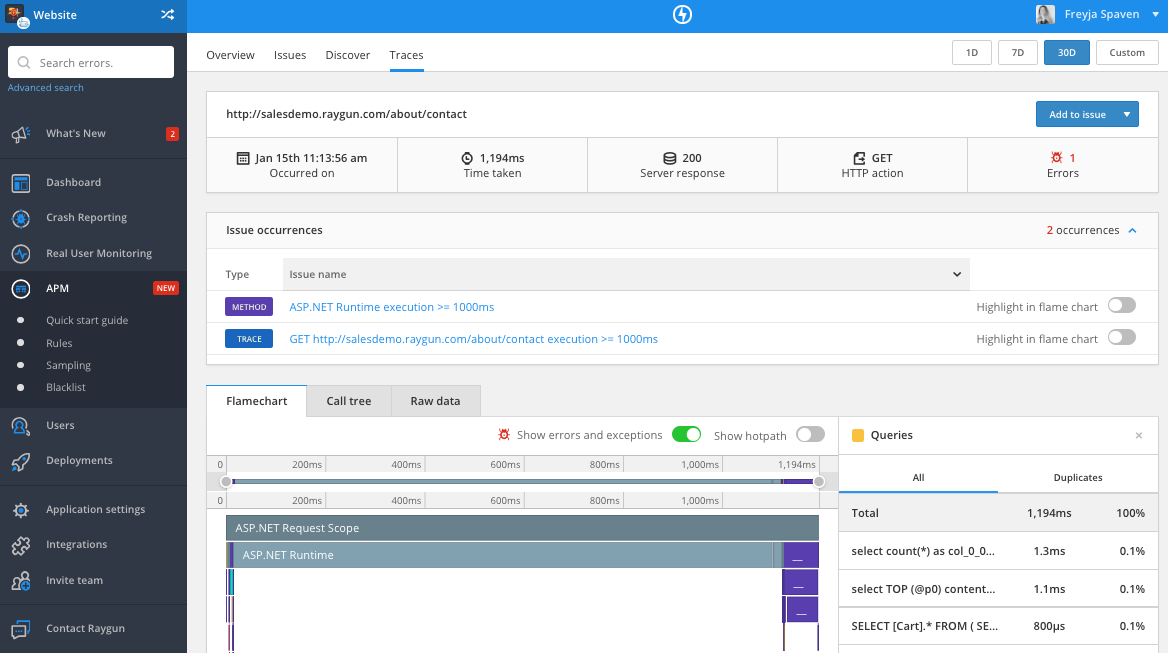

3. APM

APM stands for application performance monitoring. Confusingly, the term APM often refers to many different types of tools that let you monitor your application. APM tooling often has the aforementioned logging and infrastructure metrics built in. In this instance, though, I’m talking about the specific part of APM that relates directly to your application, its errors, and its stack traces.

APM tooling often lets you embed snippets of code within your application that catch and store your exceptions. Unlike logging, this process is usually implicit, so you don’t have to add a log entry in each part of your application to receive data about your errors. If an unhandled error occurs, your APM will capture its stack trace, provided you have your tooling set up.
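To show the general idea of implicit error capture, here is a minimal sketch of a decorator that records unhandled exceptions and their stack traces. It is not any vendor’s SDK: `monitor_errors`, `captured_errors`, and `resize_image` are hypothetical names, and the in-memory list stands in for whatever backend a real APM tool would send data to.

```python
import functools
import traceback

# Stand-in for an APM backend; a real tool would ship these somewhere central.
captured_errors = []


def monitor_errors(func):
    """Wrap a function so unhandled exceptions are recorded, then re-raised."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        try:
            return func(*args, **kwargs)
        except Exception as exc:
            captured_errors.append({
                "function": func.__name__,
                "type": type(exc).__name__,
                "message": str(exc),
                "stack_trace": traceback.format_exc(),
            })
            raise  # re-raise so the platform still counts the failure

    return wrapper


@monitor_errors
def resize_image(event: dict) -> int:
    """Hypothetical function body; raises KeyError if "width" is missing."""
    return event["width"] * 2


try:
    resize_image({})
except KeyError:
    print(captured_errors[-1]["type"], "captured with stack trace")
```

The function body contains no error-reporting code of its own, which is what makes this approach implicit compared with logging.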

If this APM data is handled in the same place as your infrastructure and logging metrics, you have a recipe for very powerful observability. When you’re catching your errors and call stacks, you can correlate these with data from your infrastructure: Did you run out of memory? Were your functions taking a long time to process? What log entries happened in the run-up to your error? Is the error limited to a single user, or does it affect a wider group?

Making the invisible visible

Hopefully this post gives you a place to start when it comes to monitoring your serverless application. Begin with the three main areas: Infrastructure monitoring, logging, and instrumenting with APM tooling.

When running distributed systems, we have to be proactive about our system visibility. Your application needs to be ready to handle as many erroneous situations as possible, and in the worst-case scenario, it should give you the data you need to remedy the situation.

Covering the three bases of your infrastructure, application monitoring, and logging should get you off to a great start. The earlier in your application’s life you can get these installed the better, as you can refine them over time as you learn more about your application and its characteristics.

When operating in a distributed systems world like serverless, it’s not if an error will occur, but when. But now you’re prepared!